As I announced in my last post, today I will continue my description of the Machine Learning family by focusing on Machine Learning itself (the perfect family parent) and on the promising family offspring (hybrid systems and modern AI).

Machine Learning fields

Machine Learning started out as a way of investigating how to make computer algorithms adapt to data for analysis purposes. Hence “learning” refers to adaptation and automated knowledge extraction. I would say it includes all kinds of adaptive pattern recognition methodologies. And this adaptation can be done in three different ways, which define the main categories of methods you can find in Machine Learning: supervised, unsupervised, and reinforcement learning. All of them take the role of the tutor in a human learning process as the metaphor to establish the taxonomy.

Supervised learning (and its usage in EEG signal analysis)

Supervised learning refers to the usage of a so-called ground truth. The ground truth is the gold standard to which the learning algorithm has to adapt. So the tutor in this case tells the student what to learn. The tutor is normally a human expert who labels the data examples to be categorized by the adaptive classifier. This is normally a very tedious process, whose difficulty might be overlooked. For instance, in automated EEG data analysis we can think about the problem of computer-based spindle detection in sleep EEG. Spindles are very characteristic waveforms that appear in the EEG at a particular sleep stage. In this case Machine Learning could be applied to distinguish spindle waveforms from the remaining EEG signal. Hence, the first step for applying a supervised algorithm would be to take the EEG streams and label the spindle samples with a “1” (positive class), whereas the remaining signal samples are labeled as “0” (negative class).

To show the difficulty of the labeling process I will tell you about an issue raised within the HIVE project. One of the activities in this project aimed at discovering whether non-invasive electrical stimulation of the brain could be used to modulate sleep. So we took data from different sleeping subjects and looked for variations in the features usually used in sleep research to characterize sleep. In this context, three different experts were asked to label the samples belonging to spindles in the EEG signals, i.e. to generate the ground truth I mentioned above. As you can imagine, there were some differences among them. That is why spindle categorization is usually done in sleep labs by consensus among several experts, as Periklis Ktonas (our sleep expert) explained at one of the project’s meetings. This example illustrates how complex the labeling of data can become, particularly in EEG analysis. Nevertheless, in some other cases the labels follow directly from the data acquisition protocol, as in Brain-Computer Interface (BCI) paradigms.
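To make the labeling problem a bit more tangible, here is a minimal sketch of how per-sample labels from several experts could be merged into one ground truth. It assumes a simple majority vote, which is only one possible way of reaching consensus and not necessarily the procedure used in sleep labs or in HIVE. The three label sequences are made up for illustration.

```python
from collections import Counter


def consensus_labels(expert_labels):
    """Majority vote per sample across the experts' 0/1 label sequences."""
    n_samples = len(expert_labels[0])
    if any(len(labels) != n_samples for labels in expert_labels):
        raise ValueError("all experts must label the same number of samples")
    consensus = []
    for votes in zip(*expert_labels):
        # With an odd number of experts and binary labels there are no ties.
        label, _ = Counter(votes).most_common(1)[0]
        consensus.append(label)
    return consensus


def agreement_rate(expert_labels):
    """Fraction of samples on which all experts gave the same label."""
    unanimous = sum(1 for votes in zip(*expert_labels) if len(set(votes)) == 1)
    return unanimous / len(expert_labels[0])


# Made-up labels from three hypothetical experts: 1 = spindle, 0 = background.
expert_a = [0, 0, 1, 1, 1, 0, 0, 0]
expert_b = [0, 1, 1, 1, 0, 0, 0, 0]
expert_c = [0, 0, 1, 1, 1, 1, 0, 0]

ground_truth = consensus_labels([expert_a, expert_b, expert_c])
unanimity = agreement_rate([expert_a, expert_b, expert_c])
print(ground_truth)  # [0, 0, 1, 1, 1, 0, 0, 0]
print(unanimity)     # 0.625
```

Even in this toy case the experts fully agree on only five of the eight samples, and the disagreements sit at the spindle borders, where they are most likely to occur.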

Supervised Learning methods include (to mention some) neural networks and kernel machines. By the way, kernel-based methods, and particularly support vector machines, are the preferred ones in the machine learning research community (as well as in medical and clinical application fields). Furthermore, recent developments in machine learning consider the combination of different supervised methods into so-called classifier ensembles. Here several classification procedures are used instead of the single one taken into account in the usual pattern recognition approach. Classifier ensembles help by splitting a complex classification problem into several simpler ones. To illustrate this we can look a bit more closely at a well-known machine learning approach for BCI, namely for event detection in the classical spelling application. The approach described in Rakotomamonjy’s paper is based on an ensemble of support vector machines. In this approach each classifier is trained to classify a particular class in the training set, e.g. the first support vector machine is trained with the signals gathered when trying to spell ‘A’, the second SVM is trained to detect ‘B’, and so on. The last stage in such systems combines the multiple classification results through data fusion.
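The structure of such an ensemble, as described in this post, can be sketched in a few lines. Note the assumptions: each ensemble member here is a very simple nearest-mean scorer standing in for a support vector machine, the fusion rule is "take the member with the highest score", and the 2-D feature vectors are made up. It illustrates the one-member-per-class idea plus a fusion stage, not the implementation from the paper.

```python
import math


def _mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


class OneVsRestScorer:
    """One ensemble member (a stand-in, NOT an SVM): scores how much a sample
    looks like its target class compared with all the other classes."""

    def __init__(self, target):
        self.target = target

    def fit(self, samples, labels):
        pos = [x for x, y in zip(samples, labels) if y == self.target]
        neg = [x for x, y in zip(samples, labels) if y != self.target]
        self.pos_mean = _mean(pos)
        self.neg_mean = _mean(neg)
        return self

    def score(self, x):
        # Positive when x is closer to the target-class mean than to the rest.
        return math.dist(x, self.neg_mean) - math.dist(x, self.pos_mean)


def train_ensemble(samples, labels):
    """Train one member per class found in the training labels."""
    return [OneVsRestScorer(c).fit(samples, labels) for c in sorted(set(labels))]


def fuse(ensemble, x):
    """Fusion stage: return the class whose member gives the highest score."""
    return max(ensemble, key=lambda member: member.score(x)).target


# Made-up 2-D feature vectors for three hypothetical target letters.
samples = [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 4.8), (0.0, 5.0), (0.2, 5.1)]
labels = ["A", "A", "B", "B", "C", "C"]

ensemble = train_ensemble(samples, labels)
predictions = [fuse(ensemble, x) for x in [(0.1, 0.2), (4.9, 5.2), (0.1, 4.9)]]
print(predictions)  # ['A', 'B', 'C']
```

Each member only has to solve a two-class problem ("my letter" versus "everything else"), which is the sense in which the ensemble splits a complex problem into simpler ones.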

Unsupervised and Reinforcement Learning (and its application in EEG signal analysis)

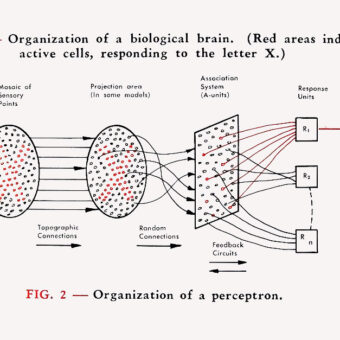

Unsupervised methods are characterized by not taking into account any ground truth in the classification. Hence, these methodologies split the data into homogeneous groups, where the homogeneity is usually characterized by a distance or proximity function, e.g. the Euclidean or the Mahalanobis distance. Data samples that are close enough are grouped together. Clustering approaches in their different flavors, e.g. agglomerative or partitional, can be categorized as unsupervised approaches. Another type of such methods that I especially like are self-organizing approaches, e.g. Self-Organizing Maps or Adaptive Resonance Theory. These are unsupervised neural networks in the sense that they mimic some processing procedures in the brain. Some researchers also place Independent Component Analysis (ICA) and associated methodologies among such unsupervised classification approaches, but I prefer to see them as feature extraction methods. ICA is extensively used in EEG signal analysis, e.g. for artefact rejection or Event-Related Potential decompositions, and is therefore worth mentioning.
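As an illustration of grouping by proximity, here is a minimal sketch of a partitional clustering procedure (k-means) using the Euclidean distance. The data points and the choice of initial centroids are assumptions made for the example. No labels are given to the algorithm at any point.

```python
import math


def kmeans(points, initial_centroids, max_iter=100):
    """Plain k-means: assign each point to its nearest centroid (Euclidean
    distance), recompute the centroids, and repeat until nothing changes."""
    centroids = [tuple(c) for c in initial_centroids]
    assignment = None
    for _ in range(max_iter):
        new_assignment = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # converged: the groups did not change
        assignment = new_assignment
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[k] = tuple(sum(col) / len(members) for col in zip(*members))
    return assignment, centroids


# Made-up 2-D feature vectors forming two visibly separate groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]

# Assumption: start from one point of each region; real uses need a smarter init.
assignment, centroids = kmeans(points, initial_centroids=[points[0], points[3]])
print(assignment)  # [0, 0, 0, 1, 1, 1]
```

Swapping `math.dist` for another proximity function changes what "homogeneous" means, and therefore which groups come out.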

I do not know much about reinforcement learning since I have never used it in my work. So the reader is advised to consult more detailed texts on this. My general idea is that this type of learning combines both unsupervised and supervised concepts, although the supervision is implemented through a reward function and not through a ground truth label associated with the learning examples. The metaphor in this case is the perfect tutor, who guides the self-exploratory action of the student, and rewards her when the action is well done.
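The difference between a reward and a label can be sketched with a very small example. The following is an epsilon-greedy learner on a toy problem with three possible actions, which is one of the simplest reward-driven settings and by no means a full reinforcement learning method. The reward function, the number of actions, and all parameter values are assumptions chosen for illustration.

```python
import random


def learn_from_rewards(reward_fn, n_actions, n_trials=1000, epsilon=0.1,
                       step_size=0.1, seed=0):
    """Epsilon-greedy learning: mostly repeat the action currently believed to
    be best, sometimes explore, and update estimates from the reward only."""
    rng = random.Random(seed)
    values = [0.0] * n_actions  # the student's current estimate per action
    for _ in range(n_trials):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # self-exploration
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = reward_fn(action)  # the tutor's feedback; no label involved
        values[action] += step_size * (reward - values[action])
    return values


def tutor_reward(action):
    # Hypothetical reward function: only action 2 counts as "well done".
    return 1.0 if action == 2 else 0.0


values = learn_from_rewards(tutor_reward, n_actions=3)
best_action = max(range(3), key=lambda a: values[a])
print(best_action)
```

The learner is never told which action is correct. It only finds out, after trying an action, how well that attempt was rewarded.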

Current trends in Artificial and Computational Intelligence

Talking of the most modern research fields brings us to hybrid systems and modern artificial intelligence. In a conversation we once had, AI expert Hector Geffner told me about the evolution of artificial intelligence from an exclusively top-down approach (as described in last week’s post) into a mixture of top-down and probabilistic approaches. This is an evolution like the one undergone by computational intelligence, although in exactly the opposite direction. Bottom-up approaches, which are typical for traditional Computational Intelligence, are complemented with top-down and logical reasoning ones to become so-called hybrid intelligent systems. In both cases, researchers are working hard on capturing the best of both worlds in order to make progress in solving real-world problems, which is what ultimately matters.

photo credit: Spirit-Fire via photopin cc