In my last post I talked about how we could apply machine learning techniques to make a computer think. What I didn’t quite cover last time is one of the biggest challenges you’ll surely hit if you intend to achieve emotion detection: obtaining ground truth data.

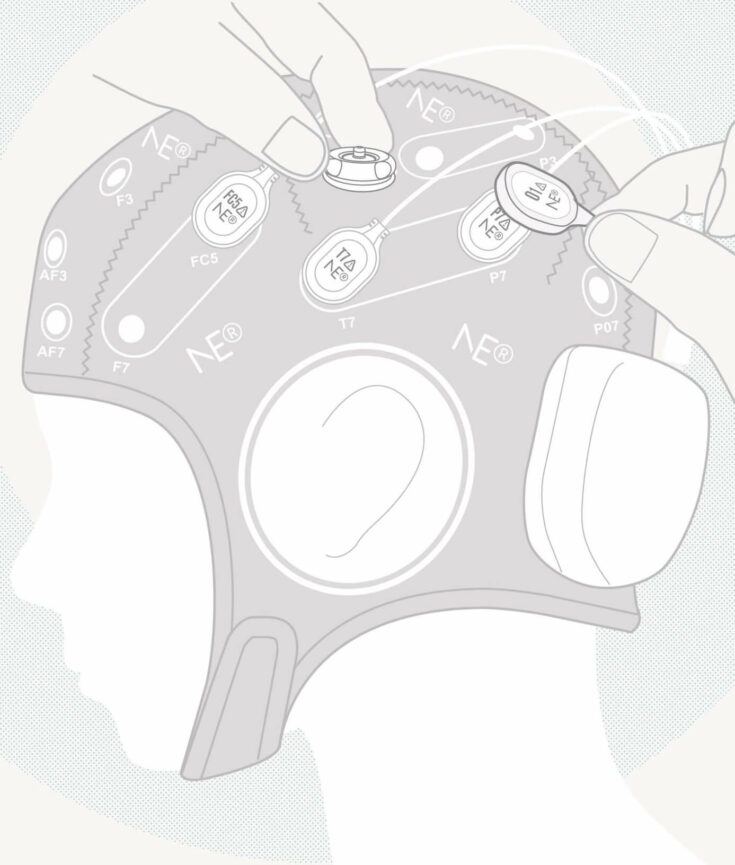

Let’s say you design a system that is meant to learn how to detect emotions. If you use machine learning techniques, you will most likely need input data corresponding to the emotions you want to teach your system. For example, if you want to train a system to detect happiness from EEG signals, you will probably use a supervised learning technique. Supervised techniques are those in which you teach the system by showing it examples of what it needs to learn. In this case, you’ll need to feed the training system EEG signals recorded from a subject while he or she is feeling happiness. These examples (here, the EEG signals) are what is called ground truth data (GT from now on).
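
To make the idea of learning from GT more concrete, here is a minimal sketch in Python. It assumes each GT example has already been reduced to a short feature vector (the numbers below are invented for illustration, not real EEG data) paired with the emotion the subject was feeling, and it uses a simple nearest-centroid classifier as a stand-in for whatever supervised technique you actually choose. `train_centroids` and `predict` are hypothetical helper names.

```python
from statistics import fmean

# Hypothetical ground truth: each example pairs an EEG feature vector
# (invented numbers, standing in for features extracted from a recording)
# with the emotion label the subject was feeling when it was recorded.
ground_truth = [
    ([0.9, 0.2, 0.4], "happiness"),
    ([0.8, 0.3, 0.5], "happiness"),
    ([0.2, 0.7, 0.6], "sadness"),
    ([0.3, 0.8, 0.5], "sadness"),
]

def train_centroids(examples):
    """Nearest-centroid training: average the feature vectors of each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: [fmean(values) for values in zip(*vectors)]
        for label, vectors in by_label.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

centroids = train_centroids(ground_truth)
print(predict(centroids, [0.85, 0.25, 0.45]))  # happiness
```

The classifier can only be as good as its labels: if the “happiness” examples were recorded while the subject was not actually feeling happy, the centroid learned for that label describes something else entirely.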

And here’s where you hit the crux of the problem: how do you obtain good-quality GT that leads to good training? In emotion recognition, the quality of the ground truth is determined by two aspects. How can you be sure what the subject was feeling when the GT was recorded? And how can you be sure how intensely the subject was actually feeling it? If you want to generate your own GT (that is, record it yourself for later use), you’ll need to address both of these aspects.
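
The two aspects above can be sketched as a filter over recorded trials. This is a hypothetical illustration, not a standard format or the protocol used at Starlab: the `Trial` fields, the assumed 1 to 9 self-reported intensity scale, the file names and the `min_intensity` threshold are all assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass
class Trial:
    """One recorded trial (hypothetical structure)."""
    target_emotion: str    # emotion the stimulus was meant to elicit
    reported_emotion: str  # emotion the subject reported feeling afterwards
    intensity: int         # self-reported intensity, assumed 1 (none) to 9 (very strong)
    eeg_file: str          # hypothetical path to the recorded EEG signal

def usable_for_training(trial, min_intensity=5):
    """Keep a trial only if both GT quality aspects hold: the subject felt
    the intended emotion, and felt it at least min_intensity strongly."""
    return (trial.reported_emotion == trial.target_emotion
            and trial.intensity >= min_intensity)

trials = [
    Trial("happiness", "happiness", 8, "s01_t01.edf"),  # right emotion, strong
    Trial("happiness", "neutral", 6, "s01_t02.edf"),    # wrong emotion
    Trial("happiness", "happiness", 2, "s01_t03.edf"),  # right emotion, too weak
]
kept = [t.eeg_file for t in trials if usable_for_training(t)]
print(kept)  # ['s01_t01.edf']
```

Relying on self-reports is itself an assumption here; how to assess what the subject really felt is a hard problem of its own.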

In order to record your own GT for emotion detection, you’ll need to elicit (provoke) some emotions in the subject. Many protocols have been proposed for emotion elicitation, using different kinds of stimuli to elicit emotions in a subject. One of the most common kinds of stimuli is audiovisual, as movies are considered a good tool for eliciting emotions. Images have also often been used to elicit emotions, and the International Affective Picture System (IAPS) is a very well-known project to develop an international framework for emotion elicitation based on static pictures. The link between music and emotions has also inspired the use of music and audio stimuli to elicit emotions.

Besides audiovisual stimuli, other strategies have been used to elicit emotions. Some works used memory recall: the subjects are asked to recall episodes from their memory that make them feel a particular emotion. Performing prepared tasks has also been proposed as a useful way to elicit emotions. Guided imagery is a particular prepared-task approach, in which the subject listens to a narrative that guides him or her into imagining a situation where a particular emotion would be elicited. This approach has been used successfully to elicit emotions.

Independently of any specific protocol or kind of stimulus, there is also the view that social context plays an important role in how emotions are naturally elicited and experienced. If emotions have a strong social component, that would affect the viability of emotion elicitation under ‘lab conditions’. It would also highlight the need to develop new protocols that run outside the lab (in real scenarios) or, at least, protocols that incorporate the social context required for emotions to be ‘real’.

We haven’t covered the big question that arises once you have elicited emotions in a subject: emotion assessment, that is, how to make sure the subject was feeling what he or she was meant to feel. Due to space constraints, I will stop here and simply recommend a must-read book if you plan to design an emotion elicitation protocol: the ‘Handbook of Emotion Elicitation and Assessment’, by James A. Coan and John J. B. Allen.

In the next post I will walk through a concrete example of an emotion elicitation design: the protocol we are currently running at Starlab to elicit emotions, which uses audiovisual stimuli in some parts and guided imagery in others, while providing social context throughout. Stay tuned, don’t miss it!