Fusion seems, at first sight, to be a complex issue. When we hear the word fusion, we might first associate it with the physics concept of nuclear fusion or, in a more mundane sense, with fusion music. In the former, two or more atomic nuclei are joined together to form a heavier one, whereas in the latter two musical styles are combined. Data fusion has something of both: joining two or more “things” together, where, in this case, the things are data.

Where can we find data fusion?

With the advancement of sensor technologies, engineers tend to integrate several sensors in a single system. These sensors generate data, and this data has to be combined at the processing stage. Or, if you take the case of databases, at some point someone might want to extract useful information from the stored data by combining it. A further type of system where data fusion appears is one in which several data streams are gathered simultaneously.

We can find examples of such systems in our daily life. Modern cars, for instance, have several distance sensors distributed along the back bumper. At a certain moment, hopefully when parking, the signals from the distance sensors have to be transformed into a single distance estimate and rendered in acoustic form to alert the driver. This alert is based on the result of a data fusion procedure.
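To make the parking example concrete, here is a minimal sketch in Python. It rests on assumptions that are not taken from any real car system: the fusion step simply keeps the closest obstacle (the minimum reading), and the names `fuse_distances`, `beep_interval_s` and the 1.5 m range are hypothetical choices for illustration.

```python
def fuse_distances(readings_m):
    """Fuse per-sensor distances (in metres) into one estimate.

    Assumption: the closest obstacle is what matters, so we take the minimum.
    """
    return min(readings_m)


def beep_interval_s(distance_m, max_range_m=1.5):
    """Map the fused distance to the pause between beeps, in seconds.

    Hypothetical mapping: the closer the obstacle, the shorter the pause.
    Returns None when nothing is within range, meaning no beep at all.
    """
    if distance_m >= max_range_m:
        return None
    return round(distance_m / max_range_m, 2)


# Four bumper sensors, each reporting its own distance in metres.
readings = [1.2, 0.45, 0.9, 1.4]
fused = fuse_distances(readings)
interval = beep_interval_s(fused)
```

The driver never hears four separate signals, only one alert derived from the fused estimate.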

Some medical EEG devices like Enobio are capable of recording brain and heart activity, as EEG and ECG signals respectively, through different electrodes. This data can be combined in order to assess whether a subject has a health problem. Or it can be stored in a medical database together with other data, such as a magnetic resonance image and the results of a blood analysis. All this data can later be used to base the health assessment on more information sources. Lastly, we can take the ubiquitous digital camera into account, where red, green, and blue image channels are captured from a scene.

You could say your digital camera carries out data fusion. But it is not that easy. The colour channels are integrated in the colour image, but not fused. As in the case of nuclear or music fusion, I think we have to obtain something different from the fused parts in order to use the term data fusion appropriately. I consider this to be one of the principles of data fusion. So we could talk about data fusion if we display a gray-value image, which can be obtained by adding the pixel values of the three channels and dividing by 3. However, not everyone shares this view, and several practitioners talk of data fusion when what they really mean is data integration. This often happens in database descriptions. For me there is a clear difference between the two topics, although it is equally clear that there is no data fusion without data integration.
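The gray-value case can be sketched in a few lines of Python. This is only an illustration under simple assumptions: each channel is a nested list of pixel values, and the function name `to_gray` is made up for the example.

```python
def to_gray(red, green, blue):
    """Fuse three colour channels into one gray-value image.

    Each pixel is the sum of the three channel values divided by 3.
    """
    return [
        [(r + g + b) / 3 for r, g, b in zip(row_r, row_g, row_b)]
        for row_r, row_g, row_b in zip(red, green, blue)
    ]


# A tiny 2x2 image, one nested list per channel.
red = [[255, 0], [30, 90]]
green = [[255, 0], [60, 90]]
blue = [[255, 0], [90, 90]]

gray = to_gray(red, green, blue)
print(gray)  # [[255.0, 0.0], [60.0, 90.0]]
```

Storing the three channels side by side would be integration. The single gray image is something new that none of the channels contains on its own.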

How to fuse data

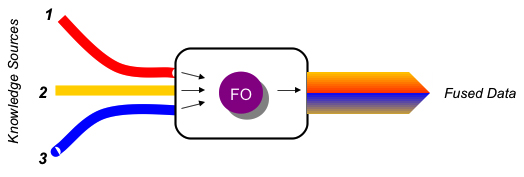

At the base of data fusion we have the so-called fusion operators. A fusion operator is the element responsible for generating the fusion result. It is the element that, for instance, transforms the distance sensor data streams into the distance estimate that drives the acoustic beep alert in the car. In other words, it is the element in a processing chain that transforms several data items into the fused data.

The simplest fusion operator is the sum, and it might be the oldest one as well, if the Ishango bone interpretation is right. Other simple ones are the minimum, the product, and the average, as in the case of the operator that transforms a colour image into a gray-value one. More complex operators have funny names like fuzzy integrals or copulas, which, by the way, some people believe were behind the world economic crisis of 2008. All of them share the feature of taking several inputs and delivering a single data stream, a stream that is expected to be more than the sum of the parts. This is when the application of data fusion really makes sense.
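The simple operators are easy to try out. The following Python sketch applies each of them sample by sample across three made-up data streams. The helper `fuse` and the dictionary `FUSION_OPERATORS` are names invented for this illustration, and the numbers are arbitrary.

```python
import math
from statistics import mean

# The simple operators named above, each mapping several inputs to one value.
FUSION_OPERATORS = {
    "sum": sum,
    "minimum": min,
    "average": mean,
    "product": math.prod,
}


def fuse(streams, operator):
    """Apply a fusion operator sample by sample across several data streams."""
    return [operator(samples) for samples in zip(*streams)]


# Three input streams with three samples each.
streams = [
    [1.0, 2.0, 4.0],
    [3.0, 2.0, 1.0],
    [2.0, 5.0, 1.0],
]

fused = {name: fuse(streams, op) for name, op in FUSION_OPERATORS.items()}
for name, stream in fused.items():
    print(name, stream)
```

Whatever operator is chosen, three streams go in and one comes out. Copulas and fuzzy integrals follow the same pattern, only with a more elaborate rule for combining the inputs.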