Since its first edition in 1994, the Sónar Festival has been one of the electronic music festivals you cannot miss. For the sixth consecutive year in Barcelona, Sónar+D organized its famous hackathon together with the Music Technology Group of the Universitat Pompeu Fabra. Sónar Music Hack Day (MHD) is a hacking session in which participants conceptualize, create and present their projects. Music + software + mobile + hardware + art + the web: anything goes as long as it is music related. The challenge is to develop disruptive technologies for music composition and performance based on non-conventional interfaces.

This year the hacking focused on wearable technologies. One hundred hackers were selected to participate, and during the first two days of Sónar+D they spent over 24 hours building new software and hardware prototypes. The companies taking part in the hackathon provided APIs, SDKs, sensors and devices to hackers willing to use them in their sonification projects. For the third year, Starlab and Neuroelectrics sponsored Sónar MHD, providing hackers with Enobio electrophysiological sensors and the tools and support needed for EEG/ECG/EMG feature calculation and streaming.

This year two members of the Brainpolyphony consortium, Jordi Sala from CRG/Mobility Lab and myself from Starlab, took part and developed a hack on body sonification inspired by the Brainpolyphony project. We named it ‘One Man Band’. We aimed to extract electrophysiological and movement measures from one person wearing a wireless sensor and convert them into music. Each measure was mapped to an independent instrument or tune so that one of us could act as a DJ and mix them, creating a novel musical interface between the body signals converted into sound and the DJ.

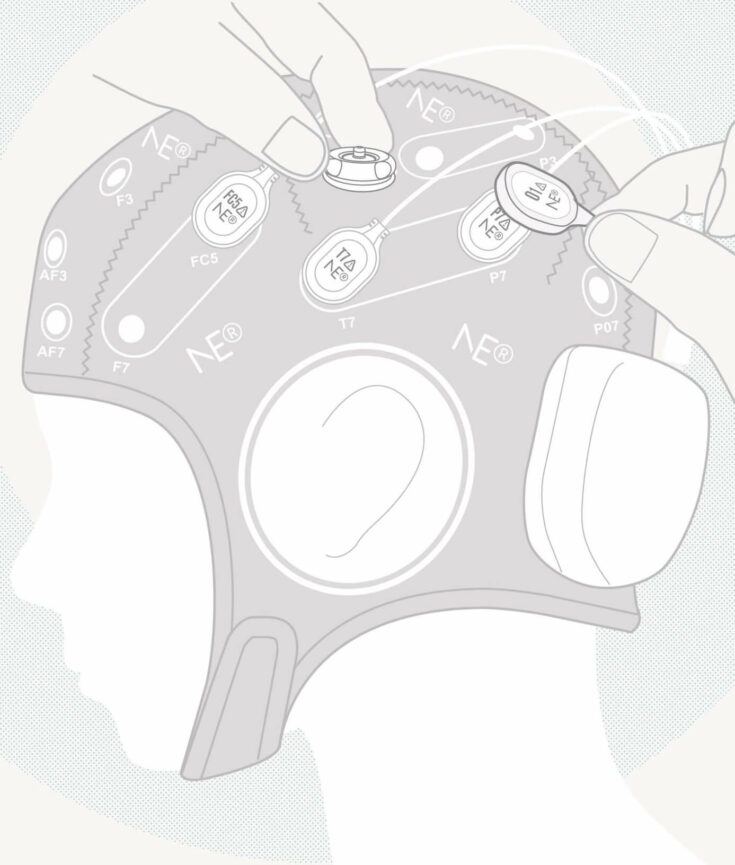

The hack used the 8-channel version of Enobio to measure brain activity (EEG) via 6 electrodes placed on the surface of the scalp, heart activity via an electrode placed on the left wrist, muscular activity via an electrode placed on the biceps, and head movements using Enobio’s integrated accelerometer. All this information was streamed via Bluetooth to a piece of software built on top of the Enobio API that calculated electrophysiological features in real time. From the brain's electrical activity we computed the emotional features valence and arousal. Arousal measures how strong the emotion is, while valence tells us whether the emotion is positive or negative. Complex emotions (fear, happiness…) can be mapped from the valence and arousal measures. From the heart we detect the heart rate (how many times the heart beats per minute), the heart-rate variability (the variation in the time interval between heartbeats), and the moment of each heartbeat. Heart rate and heart-rate variability are also arousal measures. The electrode placed on the arm measures muscular activity, so we can detect when the muscle is active and when it is not. Finally, the accelerometer measures head movements and, by defining a threshold, lets us detect when a large head movement occurs.
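The heart and movement features described above can be illustrated with a minimal Python sketch. This is not the code we ran on top of the Enobio API: the function names, the choice of SDNN (standard deviation of the beat-to-beat intervals) as the variability measure, and the accelerometer units and threshold are all assumptions made for the example.

```python
import math
from typing import List, Sequence


def rr_intervals(beat_times_s: Sequence[float]) -> List[float]:
    """Time in seconds between consecutive heartbeats."""
    return [b - a for a, b in zip(beat_times_s, beat_times_s[1:])]


def heart_rate_bpm(beat_times_s: Sequence[float]) -> float:
    """Average heart rate in beats per minute over the given beats."""
    rr = rr_intervals(beat_times_s)
    if not rr:
        raise ValueError("need at least two beats")
    return 60.0 / (sum(rr) / len(rr))


def hrv_sdnn_ms(beat_times_s: Sequence[float]) -> float:
    """Heart-rate variability as the sample standard deviation of the
    beat-to-beat intervals, in milliseconds (SDNN; an assumed choice)."""
    rr_ms = [1000.0 * x for x in rr_intervals(beat_times_s)]
    if len(rr_ms) < 2:
        raise ValueError("need at least three beats")
    mean = sum(rr_ms) / len(rr_ms)
    variance = sum((x - mean) ** 2 for x in rr_ms) / (len(rr_ms) - 1)
    return math.sqrt(variance)


def is_large_movement(accel_xyz: Sequence[float],
                      baseline_xyz: Sequence[float],
                      threshold: float) -> bool:
    """True when the accelerometer reading deviates from a resting
    baseline by more than the threshold (same, arbitrary, units)."""
    deviation = math.sqrt(sum((a - b) ** 2
                              for a, b in zip(accel_xyz, baseline_xyz)))
    return deviation > threshold
```

For example, beats detected at 0, 1, 2 and 3 seconds give a heart rate of 60 beats per minute with zero variability, while a reading that moves far enough from the resting baseline is flagged as a large head movement.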

All this information is sent via TCP/IP to a PD patch that is in charge of the sonification. When the heart beats, a drum is played. Depending on the level of the heart rate and heart-rate variability, the pitch of a tune changes, making the tune faster or slower. When the system detects that the muscle has been activated (for example when raising the arm), a guitar solo is played. Valence and arousal levels are mapped to complex sounds, and the accelerometer to a tone whose frequency changes. Finally, when a large movement is detected, the bongos are played. If the user wearing Enobio is able to self-regulate their emotions, they will be able to change the music and sounds coming out of their body. If they want, they can also interact by moving their head or raising their arm. In the following video you can have a look at the presentation of our hack: ‘One Man Band’, mixing music coming out of your body!
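To make the mapping above more concrete, here is a rough Python sketch of how one frame of features could be turned into messages for the sonification patch. It rests on several assumptions: that the PD patch is a Pure Data patch receiving text messages in the semicolon-terminated format used by its `netreceive` object, and that the message names (`drum`, `tempo`, `guitar`, `emotion`, `bongos`), the `Features` fields and the resting heart rate of 60 are all hypothetical. It also uses only the heart rate for the tempo and leaves out the accelerometer tone for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Features:
    """One frame of features (hypothetical field names)."""
    beat: bool               # a heartbeat was detected in this frame
    heart_rate_bpm: float
    muscle_active: bool      # biceps electrode above its activation level
    valence: float           # assumed range -1 (negative) to 1 (positive)
    arousal: float           # assumed range -1 (calm) to 1 (excited)
    large_movement: bool     # head movement above the threshold


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def tempo_scale(heart_rate_bpm: float, rest_bpm: float = 60.0) -> float:
    """Playback speed factor: faster heart, faster tune (assumed rule)."""
    return clamp(heart_rate_bpm / rest_bpm, 0.5, 2.0)


def to_messages(f: Features) -> List[str]:
    """Translate one frame into semicolon-terminated text messages."""
    msgs = []
    if f.beat:
        msgs.append("drum 1;")
    msgs.append(f"tempo {tempo_scale(f.heart_rate_bpm):.2f};")
    if f.muscle_active:
        msgs.append("guitar 1;")
    msgs.append(f"emotion {f.valence:.2f} {f.arousal:.2f};")
    if f.large_movement:
        msgs.append("bongos 1;")
    return msgs
```

Each string could then be written to a TCP socket connected to the patch, for instance with `socket.create_connection` and `sendall` from the Python standard library, with the patch routing every message name to its own instrument.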

EL MUNDO: http://www.elmundo.es/economia/2015/07/08/559d0a1822601dd5208b45a0.html

EL ECONOMISTA: http://www.eleconomista.es/salud/noticias/6855062/07/15/Desarrollan-el-primer-prototipo-que-transforma-las-emociones-en-sonidos.html