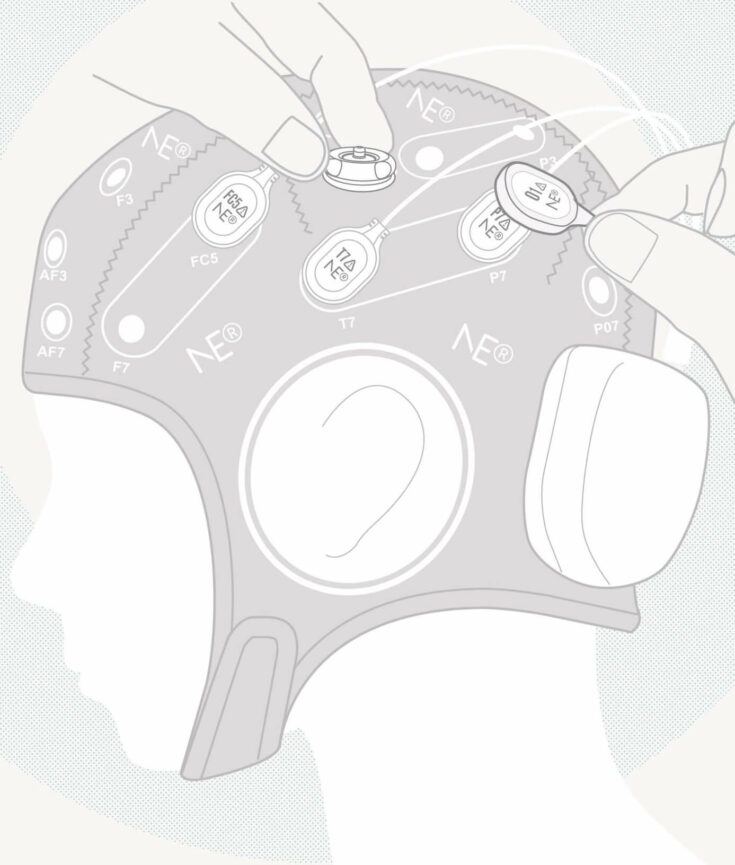

Recently my colleagues Javier Acedo and Marta Castellano posted about ExperienceLab, Neurokai’s platform for characterizing users' emotional responses, and about its most recent presentation at an art exhibition on the technology-driven evolution of humans. As you may already know, ExperienceLab gathers data from users while they perceive an external stimulus and assigns arousal and valence values to this perception process. The platform is based on Enobio and other sensing devices that simultaneously measure electrical brain activity, heart and skin responses, respiration rhythms, and facial expression. Since several emotional states can be defined in the two-dimensional arousal-valence space, the system can associate an emotional response with each stimulus. This opens up applications in user experience analysis, human-machine interfaces, and neuromarketing, among others. Today I will give eight reasons why such an emotional characterization should be multimodal, i.e. should include data from diverse sensors.
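To illustrate how emotional states can be read off the arousal-valence plane, here is a minimal Python sketch. It assumes both axes are normalized to [-1, 1] and uses a simple four-quadrant split with a small neutral zone around the origin; the quadrant labels and the `neutral_radius` parameter are hypothetical choices for illustration, not ExperienceLab's actual emotion taxonomy.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectPoint:
    """A point in the arousal-valence plane (both axes assumed in [-1, 1])."""
    arousal: float
    valence: float


# Hypothetical quadrant labels, keyed by (high arousal?, positive valence?).
QUADRANTS = {
    (True, True): "excited",    # high arousal, positive valence
    (True, False): "stressed",  # high arousal, negative valence
    (False, False): "sad",      # low arousal, negative valence
    (False, True): "relaxed",   # low arousal, positive valence
}


def emotion_label(p: AffectPoint, neutral_radius: float = 0.1) -> str:
    """Map an arousal-valence point to a coarse emotion label."""
    if not (-1.0 <= p.arousal <= 1.0 and -1.0 <= p.valence <= 1.0):
        raise ValueError("arousal and valence must lie in [-1, 1]")
    # Points very close to the origin are treated as a neutral state.
    if math.hypot(p.arousal, p.valence) < neutral_radius:
        return "neutral"
    return QUADRANTS[(p.arousal >= 0, p.valence >= 0)]


if __name__ == "__main__":
    print(emotion_label(AffectPoint(arousal=0.7, valence=0.6)))
    print(emotion_label(AffectPoint(arousal=-0.5, valence=-0.4)))
```

Finer-grained schemes place many more emotion labels around this plane; the quadrant split simply keeps the idea visible.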

The first reason is conceptual and lies in the so-called sensor gap, which we have already mentioned in older posts on data fusion. Every sensor is sensitive only to particular aspects of reality, e.g. imaging camera sensors to particular visible wavelengths, or microphones to particular audio frequencies. The only way to obtain a more complete representation of reality is to combine the sensitivities of different sensor units. In affective multimodal characterization, this conceptual reason translates into several practical ones.

The skin response is the most validated modality for affective characterization. As early as 1888, the neurologist Charles Féré demonstrated the relationship between skin response and emotional response in subjects, and Jung even used electrodermal activity (EDA) monitoring in his psychoanalytic practice. But EDA, historically also known as Galvanic Skin Response (GSR), can be affected by external factors such as humidity and temperature, so these external influences must be kept from distorting the measurement.

This is how the first affective multimodal system was born. The polygraph, invented in 1921, combined GSR with other physiological measures, namely heart rate, respiration, and blood pressure, with the aim of reducing dependence on environmental factors as much as possible. Although the utility of polygraphs for lie detection has not been proven, all of the modalities they include have been extensively validated as arousal measures in the scientific literature. However, all these sensing modalities share two problems. First, they react too slowly to external stimuli, which is critical when implementing real-time systems or when trying to keep data collection protocols short. Second, they can only characterize arousal, leaving valence out of scope.

Faster reaction times support extending the system to further modalities, and this is our fourth reason. Both facial expression and brain electrical activity seem to respond with lower latency than the other modalities. The response time of Heart Rate Variability is estimated at 6 to 10 seconds, and judging from what we have observed in different ExperienceLab applications, the GSR response is even slower. Facial expressions, on the other hand, can be detected by computer vision systems within one second.

Furthermore, differences in brain activity in response to emotional stimuli have already been detected in event-related potentials, which are defined on a millisecond timescale.

Fifth, the EEG modality not only reacts faster but is also capable of detecting subtle changes in mood. While facial expression recognition systems have mostly been trained with extreme facial expressions, EEG-based alternatives have been developed with validated, real-world stimuli. It is not easy to elicit emotional responses under lab conditions, but scientific evidence supports the performance of EEG-based measures for characterizing arousal and valence. Next time you see someone making faces in front of their laptop camera, you will know they are training a facial expression recognition module. A recent paper specifically addresses this problem.

Sixth, EEG is a crucial modality for characterizing valence, since no other modality is able to characterize it. We have already noted that polygraph-like modalities target arousal. Facial expression recognition, on the other hand, detects and classifies basic emotions, but does not measure valence, one of their components, in isolation. This is one of the most important assets of EEG technology for affective characterization.
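To make this complementarity concrete, here is a minimal late-fusion sketch in Python. It assumes each modality outputs its own estimate in [-1, 1], or `None` for a dimension it cannot measure: following the argument above, the polygraph-like channels report only arousal, while EEG reports both arousal and valence. The modality names, values, and optional per-modality weights are hypothetical, and this simple weighted average is not a description of ExperienceLab's actual fusion method.

```python
from typing import Dict, Optional, Tuple

# Per-modality estimate: (arousal, valence), each in [-1, 1], or None when
# the modality cannot provide that dimension. Names and weights are hypothetical.
Estimate = Tuple[Optional[float], Optional[float]]


def _weighted_mean(values: Dict[str, float], weights: Dict[str, float]) -> Optional[float]:
    """Weighted mean over the modalities that reported a value (default weight 1)."""
    total = sum(weights.get(name, 1.0) for name in values)
    if not values or total == 0:
        return None  # no modality covers this dimension
    return sum(v * weights.get(name, 1.0) for name, v in values.items()) / total


def fuse(estimates: Dict[str, Estimate],
         weights: Optional[Dict[str, float]] = None) -> Estimate:
    """Late fusion: average each dimension over the modalities that report it."""
    weights = weights or {}
    arousal = {m: a for m, (a, _) in estimates.items() if a is not None}
    valence = {m: v for m, (_, v) in estimates.items() if v is not None}
    return _weighted_mean(arousal, weights), _weighted_mean(valence, weights)


if __name__ == "__main__":
    physio_only = {"gsr": (0.6, None), "heart_rate": (0.4, None)}
    print(fuse(physio_only))  # arousal only; valence stays None
    with_eeg = {**physio_only, "eeg": (0.8, -0.3)}
    print(fuse(with_eeg))     # EEG adds the missing valence dimension
```

With physiological channels alone, the fused valence is `None`; adding the EEG estimate is what fills in the second axis of the arousal-valence space.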

The seventh reason for choosing a multimodal setup for affective data gathering relates to the facial expression modality, despite the limitations of its output. Facial expressions are the most natural way for humans to communicate affect non-verbally. They are the channel that evolution has favored, and therefore a reliable one. Moreover, they allow a quick, practical validation of the system: the videos used for facial expression recognition can be reviewed offline to analyze the user's reaction in a natural and straightforward manner. Nevertheless, facial expressions can easily be faked.

Faking electrophysiological measurements, by contrast, is not easy, and this is the last motivation for our multimodal advocacy. Self-regulation of electrophysiological indices is possible, but only with extensive training. Heart rate is an exception, but for the other modalities this is extremely difficult, especially for brain activity. In summary, the multimodal characterization of affective reactions offers a better-validated alternative, with quicker reaction times and a more complete and reliable emotional characterization than any unimodal alternative on its own. This is what motivated us to include all these modalities in ExperienceLab. Do you want to test it?