The superior performance of deep learning relative to other machine learning methodologies has been discussed in several forums and magazines in recent times. Today I would like to post on three reasons that, in my opinion, are the basis of this claimed superiority. I am not the first to comment on this issue [1], and for sure I won’t be the last. But I would like to extend the discussion by taking into account the practical reasons behind the success of deep learning. Hence, if you are looking for its theoretical background, you would do better to look for it in the deep learning literature, where the “Hamiltonian of the spin glass model” [2], the exploitation of compositional functions to cope with the curse of dimensionality [3], the capability of deep networks to best represent the simplicity of physics-based functions [4], and the flattening of the data manifolds [5] have been proposed as explanations.

All of these are related, but I would like to comment here on the practical aspects that turn deep learning into a useful technology for tackling some practical classification problems and applications. But not all problems and applications. Deep Learning methodologies appear today as the ultimate pattern recognition technology, as Support Vector Machines and Random Forests did before them. But according to the No Free Lunch Theorem [6], no algorithm is optimal for all possible problems. Therefore it is always good to keep the evaluation of the classification performance [7] in mind. Performance evaluation constitutes a basic stage in machine learning applications, and it is directly linked with the success of Deep Learning, as described below.

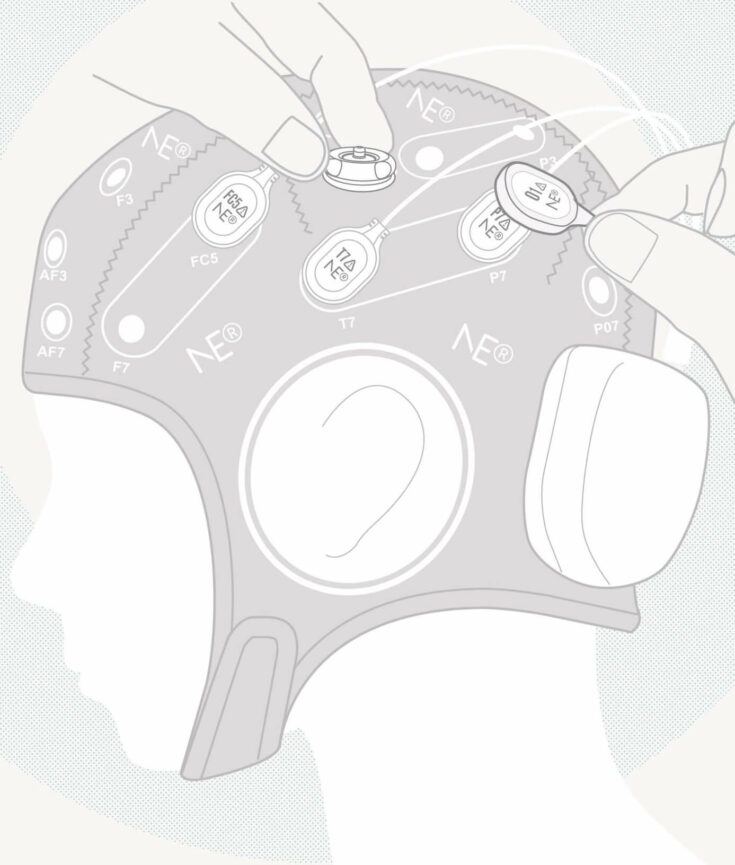

The first important reason for the success of Deep Learning is the integration of feature extraction within the training process. Not so many years ago, pattern recognition was focused on the classification stage, and feature extraction was treated as a somewhat independent problem. It relied heavily on manual, artisanal work and on expert knowledge. You used to invite an expert on the topic you wanted to solve to join your development team. For instance, you would count on an experienced electrophysiologist if you wanted to classify EEG epochs, or on a graphologist if you wanted to recognize handwriting. This expert knowledge was used to select the features of interest for a particular problem. In contrast, Deep Learning approaches do not fix the features of interest a priori. Deep Learning methods train the feature extraction and the classification stages together. For instance, in image recognition a set of image filters or primitives is trained in the first layers of the classification network. A similar concept had already been proposed in the Brain-Computer Interface community some years ago: Common Spatial Patterns (CSP) filters were trained in order to adapt the feature extraction stage to each BCI user.
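
To make the idea of training feature extraction and classification together a bit more concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions, not code from any deep learning library and not the CSP algorithm: the toy sinusoidal signals, the three-tap filter `w`, the energy feature `z`, and every function name are made up for this example. A single loss drives the updates of both the filter (feature extraction) and the logistic unit (classification).

```python
import math
import random

def make_signal(freq, n, rng):
    """Noisy sinusoid with random phase; `freq` is in cycles per sample (toy data)."""
    phase = rng.uniform(0.0, 2.0 * math.pi)
    return [math.sin(2.0 * math.pi * freq * t + phase) + rng.gauss(0.0, 0.1)
            for t in range(n)]

def forward(x, w, v, b):
    """Filter the signal, take the mean energy as the feature, then classify."""
    k = len(w)
    h = [sum(w[i] * x[j + i] for i in range(k)) for j in range(len(x) - k + 1)]
    z = sum(hj * hj for hj in h) / len(h)   # feature produced by the learned filter
    return h, z, v * z + b                  # logit of a one-feature logistic unit

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def log_loss(logit, y):
    """Binary cross-entropy computed from the logit."""
    return max(logit, 0.0) - y * logit + math.log1p(math.exp(-abs(logit)))

def train(data, epochs=300, lr=0.05):
    """Full-batch gradient descent on filter AND classifier parameters."""
    w, v, b = [0.5, 0.0, 0.0], 1.0, 0.0     # filter starts as a scaled pass-through
    history = []
    for _ in range(epochs):
        gw, gv, gb, total = [0.0] * len(w), 0.0, 0.0, 0.0
        for x, y in data:
            h, z, logit = forward(x, w, v, b)
            total += log_loss(logit, y)
            d = sigmoid(logit) - y          # dLoss/dlogit
            gv += d * z                     # classifier weight gradient
            gb += d                         # classifier bias gradient
            for i in range(len(w)):         # filter gradient via the chain rule
                dz_dw = sum(2.0 * h[j] * x[j + i] for j in range(len(h))) / len(h)
                gw[i] += d * v * dz_dw
        n = len(data)
        history.append(total / n)           # mean loss before this epoch's update
        w = [wi - lr * gi / n for wi, gi in zip(w, gw)]
        v -= lr * gv / n
        b -= lr * gb / n
    return w, v, b, history

rng = random.Random(0)
data = [(make_signal(0.05, 32, rng), 0) for _ in range(20)]   # slow signals
data += [(make_signal(0.40, 32, rng), 1) for _ in range(20)]  # fast signals
w, v, b, history = train(data)
print("learned filter:", [round(wi, 3) for wi in w])
print("loss: %.3f -> %.3f" % (history[0], history[-1]))
```

In this toy setup the initial filter gives both classes roughly the same energy, so the classifier alone has little to work with. Most of any reduction in the loss therefore has to come from adapting the filter, which is the point of training both stages jointly.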

The second reason is that Deep Learning has succeeded in previously unsolved problems because it encourages the collection of large data sets and the systematic integration of performance evaluation in the development process. These are two sides of the same coin. Once you have large data sets, you cannot conduct the performance evaluation manually. You need to automate the process as much as possible. This automation implies implementing a cross-validation stage and integrating it in the development process. Large data set collection and performance evaluation have also found very good support in the popularization of data analysis challenges and platforms. The first challenges and competitions were organized around the most important computer vision and pattern recognition conferences. This was the case, for instance, with the PASCAL [8] and ImageNet [9] challenges. For the first time, these challenges made large image data sets available and, most importantly, provided associated ground truth labels for the systematic performance evaluation of the algorithms. Furthermore, the evaluation was blind: the ground truth of the test set was withheld from the participants, so it was not possible to tune parameters on the test set to boost the reported performance. The same challenge concept was then applied on data analysis platforms, of which the most popular is Kaggle [10], although it is not the only one; see for example DrivenData [11] and InnoCentive [12]. The data contest platforms make use of the same concepts: they provide a data set for training and a blind data set for testing, plus a platform to compare the performances achieved by the competing groups. This has definitely been a good playground for data science in general, and for Deep Learning in particular.
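
The evaluation protocol described above (cross-validation during development, plus one blind test set that is scored only once) can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not the procedure of any particular challenge: the two Gaussian blobs, the nearest-centroid classifier, the 80/20 split, and all function names are assumptions chosen to keep the example self-contained.

```python
import random

def make_blobs(n_per_class, rng):
    """Toy 2-D data: two Gaussian clouds, labelled 0 and 1."""
    points, labels = [], []
    for label, centre in enumerate([(0.0, 0.0), (3.0, 3.0)]):
        for _ in range(n_per_class):
            points.append([rng.gauss(c, 1.0) for c in centre])
            labels.append(label)
    return points, labels

def fit_centroids(points, labels):
    """Nearest-centroid 'training': store the mean point of each class."""
    sums, counts = {}, {}
    for p, y in zip(points, labels):
        s = sums.setdefault(y, [0.0] * len(p))
        for i, pi in enumerate(p):
            s[i] += pi
        counts[y] = counts.get(y, 0) + 1
    return {y: [si / counts[y] for si in s] for y, s in sums.items()}

def predict(centroids, p):
    """Return the label of the closest class centroid."""
    return min(centroids,
               key=lambda y: sum((a - c) ** 2 for a, c in zip(p, centroids[y])))

def accuracy(centroids, points, labels):
    hits = sum(predict(centroids, p) == y for p, y in zip(points, labels))
    return hits / len(points)

def k_fold(indices, k):
    """Split already-shuffled indices into k disjoint folds."""
    return [indices[i::k] for i in range(k)]

rng = random.Random(42)
points, labels = make_blobs(50, rng)

def subset(idx):
    return [points[j] for j in idx], [labels[j] for j in idx]

# Set the blind test set aside first; it plays no role during development.
order = list(range(len(points)))
rng.shuffle(order)
test_idx, dev_idx = order[:20], order[20:]

# Automated 5-fold cross-validation on the development data only.
folds = k_fold(dev_idx, 5)
cv_scores = []
for i, val_idx in enumerate(folds):
    train_idx = [j for f in folds[:i] + folds[i + 1:] for j in f]
    model = fit_centroids(*subset(train_idx))
    cv_scores.append(accuracy(model, *subset(val_idx)))
cv_mean = sum(cv_scores) / len(cv_scores)

# One final evaluation on the blind set, after all choices have been made.
final_model = fit_centroids(*subset(dev_idx))
test_acc = accuracy(final_model, *subset(test_idx))
print("cross-validation accuracy: %.2f" % cv_mean)
print("blind test accuracy: %.2f" % test_acc)
```

The design choice worth noticing is the order of operations: the blind indices are split off before any model is fitted, so nothing learned during cross-validation can leak into the final score.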

The last thought I would like to share with you is closely related to the former ones. None of the innovations mentioned above would have been possible without technology development. The decrease in memory and storage prices [13] allows storing data sets of ever-increasing volume. The well-known Moore’s law describes the tremendous simultaneous increase in computational power. Lastly, the explosion of network technologies has definitely democratized access to both memory and computational power. Cloud repositories and High Performance Computing are allowing an exponential increase in the complexity of the implemented architectures, i.e. in the number of network layers and nodes taken into account. So far this has proven to be the most successful path to follow. For the moment …

[1] http://www.kdnuggets.com/2016/08/yann-lecun-3-thoughts-deep-learning.html

[2] https://arxiv.org/pdf/1412.0233.pdf

[3] https://arxiv.org/pdf/1611.00740v2.pdf

[4] https://arxiv.org/abs/1608.08225

[5] http://ieeexplore.ieee.org/document/7348689/

[6] https://en.wikipedia.org/wiki/No_free_lunch_in_search_and_optimization

[8] http://host.robots.ox.ac.uk/pascal/VOC/

[9] http://www.image-net.org/challenges/LSVRC/

[11] https://www.drivendata.org/