Have you ever wondered how many sensors, signal-processing steps and subtasks it takes for the body to process and successfully execute a command?

The recent paper by Crea et al. (Scientific Reports, 2018) represents an exciting step towards the development of practical brain-computer interfaces (BCIs). In this case, the system is designed for patients with severe deficits in arm and finger control that affect daily activities such as drinking or eating, which we usually take for granted. Such impairments arise from spinal cord injuries or stroke, for example, and have a very large impact on quality of life.

The BCI in this paper is a complex system that combines a whole-arm exoskeleton with EEG and EOG (electrical signals from the brain and from eye movements) for reaching and grasping control. The main challenge is to individualize the system while making it user-friendly, safe and effective.

As the authors point out, recent work with invasive measurements (e.g., see this and this) has delivered impressive results. Implantation, however, carries risks and morbidity. The authors of this paper (led by Surjo Soekadar) demonstrated in previous work (with our admired advisor Niels Birbaumer) the feasibility of asynchronous EEG/EOG BCIs for grasping. By asynchronous, we mean interfaces that can be used at any time, without synchronization to timing cues (which, when present, greatly simplify the algorithmic side of things, at a significant cost to the user experience).

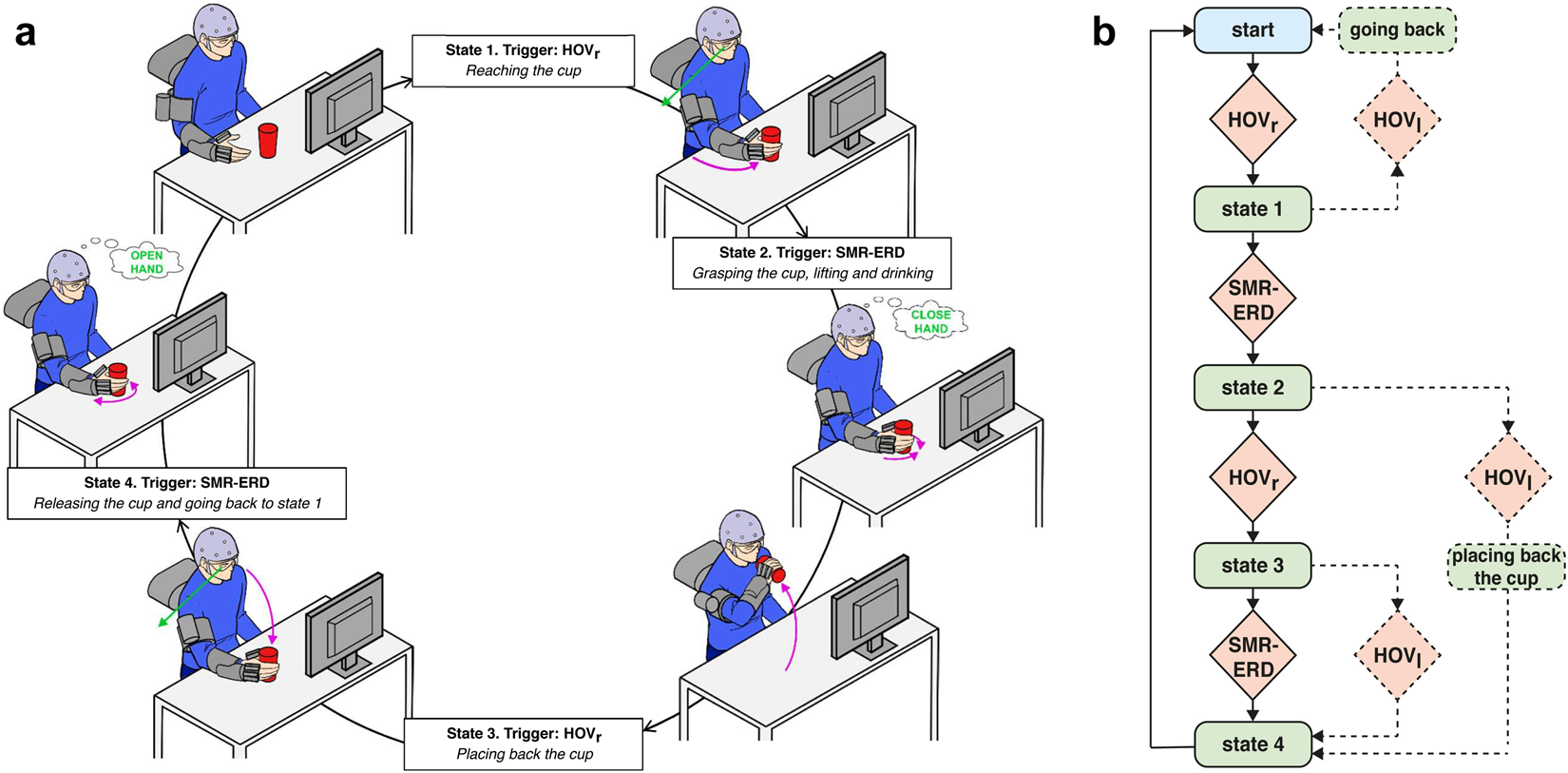

Crea et al. go beyond that earlier work in two respects. First, they extend the interface. In the authors’ words, “In contrast to a simple grasping task, operating a whole-arm exoskeleton, for example, to drink, involves a series of sub-tasks such as reaching, grasping and lifting.” This extension translates into a much bigger space of possible actions, with a large number of degrees of freedom, and the information transfer bandwidth required grows rapidly. To handle this, robotics comes to the rescue in the form of a vision-guided autonomous system. Similar processes occur in the brain, where “automatized” tasks are not consciously controlled. In other words, the system represents a fusion of human and machine intelligence connected by EEG and EOG signals. Robot and human collaborate on the task of reaching for and grasping a glass of water, drinking, and placing it back on the table. The human sits at the top of the command chain, initiating the reaching action (via EOG signals) and the grasping action (via EEG), as shown in the figure below.

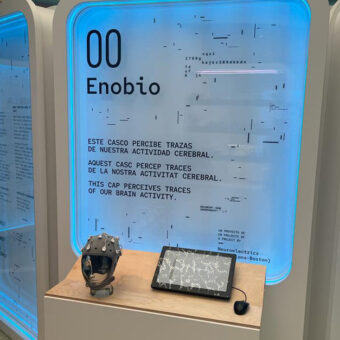
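The division of labour described above, where the user issues a few high-level commands and the robot fills in the motion details, can be pictured as a small state machine. The following is only a minimal sketch, not the authors' control logic: the class, method and phase names are hypothetical, and the real system handles many more states (lifting, drinking, placing the glass back).

```python
from enum import Enum, auto


class Phase(Enum):
    IDLE = auto()        # waiting for the user
    REACHING = auto()    # vision-guided robot moves the arm to the object
    AT_OBJECT = auto()   # hand is positioned, waiting for the user
    GRASPING = auto()    # hand closes around the object


class SharedController:
    """Hypothetical shared-control loop: the user decides *when*, the robot decides *how*."""

    def __init__(self):
        self.phase = Phase.IDLE
        self.log = []

    def on_eog_command(self):
        # Eye-movement (EOG) command from the user: start the autonomous reach.
        if self.phase is Phase.IDLE:
            self.phase = Phase.REACHING
            self.log.append("reach started")

    def on_robot_arrived(self):
        # Event from the vision-guided subsystem: the hand is at the object.
        if self.phase is Phase.REACHING:
            self.phase = Phase.AT_OBJECT
            self.log.append("hand at object")

    def on_eeg_command(self):
        # Brain-signal (EEG) command from the user: close the hand.
        # Ignored unless the hand is already at the object.
        if self.phase is Phase.AT_OBJECT:
            self.phase = Phase.GRASPING
            self.log.append("grasp started")


controller = SharedController()
controller.on_eeg_command()    # too early: ignored, still IDLE
controller.on_eog_command()    # user initiates the reach
controller.on_robot_arrived()  # robot reports arrival
controller.on_eeg_command()    # user initiates the grasp
```

The point of the sketch is the bandwidth argument: the user only has to deliver two discrete decisions, while the continuous, high-dimensional trajectory is delegated to the machine.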

As for EEG/EOG, the authors use Neuroelectrics’ Enobio wireless system with six solid-gel electrodes. Using BCI2000, these signals were translated into commands for shared control of the exoskeleton with the robotic vision-guided system. The latter was used to track and reach the object to be grasped. The overall system is quite complex, involving IR cameras, Enobio, exoskeleton components (hand-wrist and shoulder-elbow) and a visual interface. Most of the communication across subsystems was mediated by TCP/IP (e.g., Enobio’s software, NIC, can stream EEG data continuously over a network).
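To give a flavour of what receiving a continuous stream over TCP involves, here is a minimal sketch of a receiver. The wire format is an assumption made purely for illustration (one frame is six little-endian 32-bit floats, one per electrode); it is not NIC's actual protocol, and `recv_exact` and `read_frames` are hypothetical helper names. What the sketch does show is a general property of TCP: it delivers a byte stream, not messages, so the receiver must reassemble complete frames itself.

```python
import socket
import struct

# Assumed frame layout (NOT the real NIC format): six little-endian float32 values.
FRAME = struct.Struct("<6f")


def recv_exact(sock, n):
    """Read exactly n bytes, since a single recv() may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("stream closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def read_frames(sock, count):
    """Return `count` frames, each a tuple with one sample per channel."""
    return [FRAME.unpack(recv_exact(sock, FRAME.size)) for _ in range(count)]


# Local demonstration with a connected socket pair standing in for the network.
sender, receiver = socket.socketpair()
sender.sendall(FRAME.pack(1.0, 2.0, 3.0, 4.0, 5.0, 6.0) * 2)
frames = read_frames(receiver, 2)
sender.close()
receiver.close()
```

In a real deployment the receiver would connect to the streaming host and port and follow the vendor's documented format instead of the placeholder layout used here.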

The end result will remind sci-fi fans of the concept of a cyborg or, more precisely, of a Lobster (one built not with internal implants but with a smart external shell, like the one worn by Iron Man). It is the fascinating meld of human and artificial intelligence with robotics that makes this and similar developments exciting, as they bypass the bandwidth limitations of BCIs through shared control with an exocortex. As the technology elements in such systems (sensors, signal processing, AI, robotics) evolve and coalesce, their impact will be enormous and will probably spill over into consumer applications. After all, all you may really want is to drink that glass of water, not worry about the million details that actually go into realizing that stupendous feat.