On 10 December in Barcelona, we were happy to present the first Brainpolyphony Orchestra Concert. Brainpolyphony is a project born from a collaboration between CRG, the University of Barcelona and Starlab, with the goal of giving a voice, or in this case music, to people with severe communication difficulties.

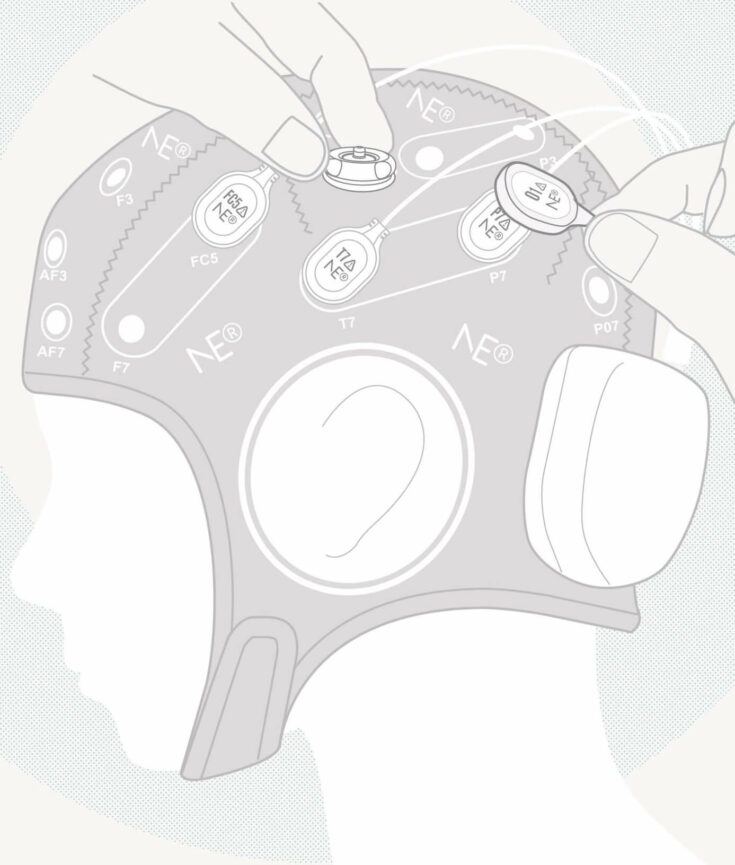

Patients with neurological diseases such as cerebral palsy often experience severe motor and/or speech disabilities that make it very difficult, and in some cases impossible, for them to communicate with their families and carers. We believe that one way to overcome these communication difficulties is to develop a Brain Computer Interface (BCI) system that relies on robust, reliable electroencephalographic patterns. Since emotions play a crucial role in the daily lives of human beings, we believe that monitoring emotional changes is the most promising approach to communication. The platform works as a dictionary of emotions: it detects patterns associated with emotional states (rhythms in the brain's electrical activity) and translates them into emotional sound, giving users and caregivers a new tool to understand those signals directly.

Data sonification is the process of presenting information acoustically. Sonification aims to take advantage of specific characteristics of human hearing, such as its higher temporal, frequency and amplitude resolution compared to vision. Most of the work on sonification in rehabilitation has been conducted with visually impaired people; however, sonification also holds great potential in assistive technologies for offering communication channels to people with restricted capacities. Following this line, Brainpolyphony uses the sonification of emotions as a communication vehicle for cerebral palsy patients. Its musical composition module uses emotional information to create, in real time and following musical rules, a unique theme that reflects the user's emotional state. For example, if the person using the Brainpolyphony system starts feeling sad, the music coming out of the platform will be unmistakably gloomy and melancholic.
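To make the idea of "following musical rules" a little more concrete, here is a minimal Python sketch of how a detected emotional state could be turned into musical parameters. It is not the Brainpolyphony composition module: the emotion labels, the `MusicParams` fields, the `EMOTION_TO_PARAMS` table and the `phrase_for` helper are all hypothetical, and the mapping simply follows the common convention that slow, low, minor-mode material sounds sad while faster, major-mode material sounds happy.

```python
from dataclasses import dataclass


# Hypothetical musical parameters; NOT the actual Brainpolyphony rules.
@dataclass(frozen=True)
class MusicParams:
    mode: str       # "major" or "minor"
    tempo_bpm: int  # beats per minute
    root_midi: int  # MIDI note number of the tonic (60 = middle C)


# Illustrative mapping from an emotion label to musical parameters.
EMOTION_TO_PARAMS = {
    "happy": MusicParams("major", 132, 64),
    "calm": MusicParams("major", 72, 60),
    "sad": MusicParams("minor", 60, 52),
    "tense": MusicParams("minor", 140, 57),
}

# Scale intervals in semitones above the tonic (natural minor for "minor").
SCALES = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "minor": [0, 2, 3, 5, 7, 8, 10],
}


def phrase_for(emotion: str, degrees: list[int]) -> list[tuple[int, float]]:
    """Turn scale degrees into (MIDI note, duration in seconds) pairs.

    Degree 0 is the tonic; degrees beyond the scale length wrap into
    the next octave. Each note lasts one beat at the emotion's tempo.
    """
    params = EMOTION_TO_PARAMS[emotion]
    scale = SCALES[params.mode]
    beat_seconds = 60.0 / params.tempo_bpm
    notes = []
    for degree in degrees:
        octave, step = divmod(degree, len(scale))
        notes.append((params.root_midi + 12 * octave + scale[step], beat_seconds))
    return notes


if __name__ == "__main__":
    print(phrase_for("sad", [0, 2, 4, 2, 0]))
```

Here `phrase_for("sad", [0, 2, 4, 2, 0])` yields a slow, low minor arpeggio of one-second notes, `[(52, 1.0), (55, 1.0), (59, 1.0), (55, 1.0), (52, 1.0)]`, which a synthesizer or MIDI library could then play. A real system would more likely work from a continuous emotional estimate than from four fixed labels.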

Using the first prototype, three volunteers with cerebral palsy, Marc, Mercé and Pili, came on stage. Ivan Cester, our composer, who specializes in film soundtracks and jingles, created a new live musical composition (using Max Studio) whose melody changed according to the volunteers' emotional information, streamed in real time. The three volunteers watched a set of videos with strong emotional content so that their emotional signatures would change during the performance. As a result, the three of them created a unique, dynamic musical composition based on their measured emotions. The Sant Cugat Young Orchestra improvised with them, creating an incredible experience!

But it is better to see it in action. In the following videos you can see (and hear) Marc, Pili and Mercé watching the videos with the orchestra behind them. However, there is still a long way to go, as this was a proof of concept of the very first prototype. In the coming years we hope to develop a reliable, customized and affordable solution that could improve the quality of life of many people with severe communication problems.